Building a two-node IBM GPFS cluster on IBM AIX

Step-by-step installation and configuration

Overview

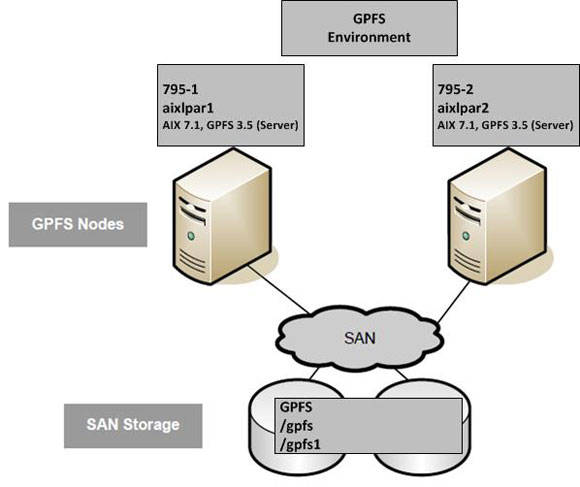

The purpose of this article is to provide a step-by-step guide for installing and configuring a simple two-node GPFS cluster on AIX. The following diagram provides a visual representation of the cluster configuration.

Figure 1. Visual representation of the cluster configuration

GPFS

GPFS provides a true “shared file system” capability, with excellent performance and scalability. GPFS allows concurrent access for a group of computers to a common set of file data over a common storage area network (SAN) infrastructure, a network, or a mix of connection types. GPFS provides storage management, information lifecycle management tools, and centralized administration and allows for shared access to file systems from remote GPFS clusters providing a global namespace.

GPFS offers data tiering, replication, and many other advanced features. The configuration can be as simple or complex as you want.

Preparing the AIX environment for GPFS

We’ll assume that you have already purchased the necessary licenses and software for GPFS. With a copy of the GPFS software available, copy the GPFS file sets to each of the AIX nodes on which you need to run GPFS.

In this article, each partition was built with AIX version 7.1, Technology Level 2, Service Pack 1:
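The level can be confirmed with the oslevel -s command. The sketch below is illustrative only: the check_level helper and the guard around oslevel are additions for this example, and the trailing build-date portion of the level string varies from system to system.

```shell
# AIX 7.1 TL2 SP1 is reported by `oslevel -s` as 7100-02-01-<build date>.
expected_prefix="7100-02-01"

check_level() {
    # $1: level string as printed by `oslevel -s`
    case "$1" in
        "$expected_prefix"-*) echo "OK: $1" ;;
        *) echo "Unexpected level: $1" ;;
    esac
}

if command -v oslevel >/dev/null 2>&1; then
    check_level "$(oslevel -s)"
else
    echo "oslevel not found; run this on the AIX partition"
fi
```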

Each AIX system is configured with seven SAN disks. One disk is used for the AIX operating system (rootvg) and the remaining six disks are used by GPFS.

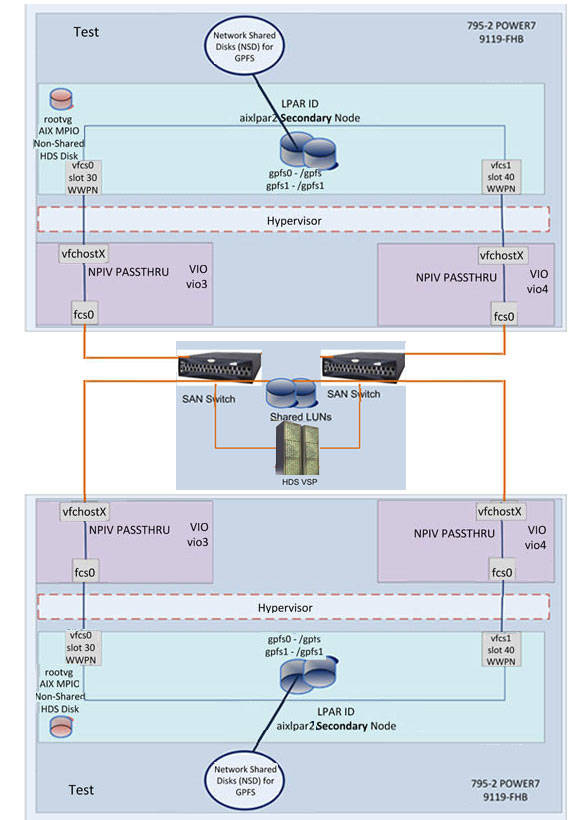

The SAN disks (to be used with GPFS) are assigned to both nodes (that is, they are shared between both partitions). Both AIX partitions are configured with virtual Fibre Channel adapters and access their shared storage through the SAN, as shown in the following figure.

Figure 2. Deployment diagram

The following attributes, shown in the table below, were changed for each hdisk, using the chdev command.

| AIX device name | Size in GB | AIX disk device type | Algorithm | queue_depth | reserve_policy |
|---|---|---|---|---|---|
| hdisk0 | 50 | Hitachi MPIO Disk VSP | round_robin | 32 | no_reserve |
| hdisk1 | 50 | Hitachi MPIO Disk VSP | round_robin | 32 | no_reserve |
| hdisk2 | 50 | Hitachi MPIO Disk VSP | round_robin | 32 | no_reserve |
| hdisk3 | 50 | Hitachi MPIO Disk VSP | round_robin | 32 | no_reserve |
| hdisk4 | 50 | Hitachi MPIO Disk VSP | round_robin | 32 | no_reserve |
| hdisk5 | 50 | Hitachi MPIO Disk VSP | round_robin | 32 | no_reserve |
| hdisk6 | 50 | Hitachi MPIO Disk VSP | round_robin | 32 | no_reserve |

The lsattr command can be used to verify that each attribute is set to the correct value:
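As a rough sketch, the attribute change and its verification could be scripted as follows. The run helper and the DRY_RUN variable are hypothetical conveniences, not part of AIX: by default the script only prints the commands, and setting DRY_RUN=0 runs them. The loop covers hdisk1 to hdisk6 only, on the assumption that hdisk0 holds rootvg and is in use, in which case it would typically need chdev -P and a reboot instead.

```shell
# Print each command by default; set DRY_RUN=0 on the real node to execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

set_disk_attrs() {
    # Assumption: hdisk1..hdisk6 are the six disks reserved for GPFS.
    for d in hdisk1 hdisk2 hdisk3 hdisk4 hdisk5 hdisk6; do
        # Apply the three attribute values from the table.
        run chdev -l "$d" -a algorithm=round_robin -a queue_depth=32 -a reserve_policy=no_reserve
        # Show the effective values for the same three attributes.
        run lsattr -El "$d" -a algorithm -a queue_depth -a reserve_policy
    done
}

set_disk_attrs
```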

The next step is to configure Secure Shell (SSH) so that both nodes can communicate with each other. When building a GPFS cluster, you must ensure that the nodes in the cluster have SSH configured so that they do not require password authentication. This requires configuring Rivest-Shamir-Adleman (RSA) key pairs for the root user’s SSH configuration. The keys must be set up in both directions, between all nodes in the GPFS cluster.

The mm commands in GPFS require authentication in order to work. If the keys are not configured correctly, the commands prompt for the root password each time and the GPFS cluster might fail. A good way to test this is to ensure that the ssh command works without being interrupted by a request for the root password.

You can refer to the step-by-step guide for configuring SSH keys on AIX:

You can confirm that the nodes can communicate with each other (unhindered) using SSH with the following commands on each node:
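One way to run this check is sketched below. The node name aixlpar2a is assumed by analogy with aixlpar1a, and the run helper and DRY_RUN variable are hypothetical: the script prints the ssh commands unless DRY_RUN=0 is set. BatchMode=yes is a standard OpenSSH option that makes ssh fail rather than prompt for a password, which is exactly the condition we want to detect.

```shell
# Print each command by default; set DRY_RUN=0 on the real node to execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

check_ssh() {
    # Assumption: these are the administration host names of the two nodes.
    for node in aixlpar1a aixlpar2a; do
        # A password prompt would indicate a key problem; BatchMode turns it into an error.
        run ssh -o BatchMode=yes -o ConnectTimeout=5 "$node" date
    done
}

check_ssh
```

Run it on each node in turn, so that the keys are tested in both directions.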

With SSH working, configure the WCOLL (Working Collective) environment variable for the root user. For example, create a text file that lists each of the nodes, one per line:
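A minimal sketch of such a file is shown here. The path /tmp/gpfs-nodes.list and the host name aixlpar2a are assumptions made for illustration; use whatever location and names suit your environment.

```shell
# Hypothetical location for the node list; override NODE_LIST to change it.
NODE_LIST=${NODE_LIST:-/tmp/gpfs-nodes.list}

# One node name per line.
cat > "$NODE_LIST" <<'EOF'
aixlpar1a
aixlpar2a
EOF

cat "$NODE_LIST"
```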

Copy the node file to all nodes in the cluster.

Add the following entry to the root user’s .kshrc file. This will allow the root user to execute commands on all nodes in the GPFS cluster using the dsh or mmdsh commands.

The root user’s PATH should be modified to ensure that all GPFS mm commands are available to the system administrator. Add the following entry to the root user’s .kshrc file.
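Taken together, the two .kshrc entries described above might look like this. The node list path is a hypothetical example; /usr/lpp/mmfs/bin is the directory where GPFS places its mm commands.

```shell
# Entries for the root user's .kshrc file.

# Assumption: the node list was saved at this (hypothetical) path on every node.
export WCOLL=/tmp/gpfs-nodes.list

# Make the GPFS mm commands available without typing the full path.
export PATH=$PATH:/usr/lpp/mmfs/bin
```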

The /etc/hosts file should be consistent across all nodes in the GPFS cluster. The IP addresses of every node must be added to /etc/hosts on each cluster node. This is recommended, even when Domain Name System (DNS) is configured on each node. For example:
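Once the entries are in place, a quick consistency check can be run on each node. This is only a sketch: the check_hosts function is a hypothetical helper, aixlpar2a is assumed by analogy with aixlpar1a, and the addresses in the comment are placeholders rather than values from the original environment.

```shell
# Example layout (addresses are placeholders):
#   10.1.1.1    aixlpar1a    # administration network
#   10.1.1.2    aixlpar2a
#   10.1.2.1    aixlpar1p    # private GPFS network
#   10.1.2.2    aixlpar2p

check_hosts() {
    # $1: hosts file to inspect
    for name in aixlpar1a aixlpar2a aixlpar1p aixlpar2p; do
        if grep -qw "$name" "$1" 2>/dev/null; then
            echo "$name: present"
        else
            echo "$name: MISSING"
        fi
    done
}

check_hosts /etc/hosts
```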

Installing GPFS on AIX

Now that the AIX environment is configured, the next step is to install the GPFS software on each node. This is a very straightforward process.

We will install GPFS version 3.5 (base-level file sets) and then apply the latest updates to bring the level up to 3.5.0.10. There are only three file sets to install. You can use System Management Interface Tool (SMIT) or the installp command to install the software.

Repeat this operation on the second node.
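With installp, the base-level installation might look like the sketch below. The image directory is hypothetical, and the three file set names are the ones I would expect for GPFS 3.5 on AIX; confirm them against your installation media. The run helper prints the commands unless DRY_RUN=0 is set.

```shell
# Print each command by default; set DRY_RUN=0 on the real node to execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Assumption: the base-level images were copied to this (hypothetical) directory.
GPFS_BASE_DIR=${GPFS_BASE_DIR:-/tmp/cg/gpfs_base}

install_gpfs_base() {
    # inutoc builds the .toc file that installp reads from the directory.
    run inutoc "$GPFS_BASE_DIR"
    # -a apply, -X extend file systems if needed, -Y accept licenses, -d image source
    run installp -aXYd "$GPFS_BASE_DIR" gpfs.base gpfs.docs.data gpfs.msg.en_US
}

install_gpfs_base
```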

You can verify that the base-level GPFS file sets are installed by using the lslpp command:

The latest GPFS updates are installed next. Again, you can use SMIT (or installp) to update the file sets to the latest level. The lslpp command can be used to verify that the GPFS file sets have been updated.

Repeat the update on the second node.
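The update and the verification could be scripted in the same style. Again, the update directory is a hypothetical path, and the sketch assumes that it contains only the GPFS update images, so that installing all of its contents is safe.

```shell
# Print each command by default; set DRY_RUN=0 on the real node to execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Assumption: the update images were copied to this (hypothetical) directory.
GPFS_UPDATE_DIR=${GPFS_UPDATE_DIR:-/tmp/cg/gpfs_update}

update_gpfs() {
    run inutoc "$GPFS_UPDATE_DIR"
    # Apply every update image found in the directory.
    run installp -aXYd "$GPFS_UPDATE_DIR" all
    # List the installed GPFS file sets and their levels.
    run lslpp -l "gpfs.*"
}

update_gpfs
```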

Configuring the GPFS cluster

Now that GPFS is installed, we can create a cluster across both AIX systems. First, we create a text file that contains a list of each of the nodes and their GPFS description and purpose. We have chosen to configure each node as a GPFS quorum manager. Each node is a GPFS server. If you are unsure of how many quorum managers and GPFS servers are required in your environment, refer to the GPFS Concepts, Planning, and Installation document for guidance.
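A node file for this layout could be generated as sketched below. The file name /tmp/cg/gpfs-nodes.txt is an assumption; each line follows the NodeName:NodeDesignations:AdminNodeName form, with the last field left empty.

```shell
# Hypothetical location for the node descriptor file.
NODE_FILE=/tmp/cg/gpfs-nodes.txt
mkdir -p "$(dirname "$NODE_FILE")"

# Both nodes are quorum nodes and may act as file system managers.
cat > "$NODE_FILE" <<'EOF'
aixlpar1p:quorum-manager:
aixlpar2p:quorum-manager:
EOF

cat "$NODE_FILE"
```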

The cluster is created using the mmcrcluster command. The GPFS cluster name is GPFS_CLUSTER1. The primary cluster configuration server is aixlpar1p and the secondary is aixlpar2p. We have specified that ssh and scp will be used for cluster communication and administration.
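Put together, the cluster creation command could look like the following sketch. The node file path is hypothetical and the ssh and scp locations are assumed to be /usr/bin; the run helper prints the command unless DRY_RUN=0 is set.

```shell
# Print each command by default; set DRY_RUN=0 on the real node to execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

create_cluster() {
    # -C cluster name, -N node file, -p/-s primary and secondary configuration servers,
    # -r remote shell command, -R remote file copy command
    run mmcrcluster -C GPFS_CLUSTER1 -N /tmp/cg/gpfs-nodes.txt \
        -p aixlpar1p -s aixlpar2p -r /usr/bin/ssh -R /usr/bin/scp
}

create_cluster
```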

Note: To ensure that GPFS daemon communication occurs over the private GPFS network, we specified the GPFS daemon node names (that is, host names ending with p) during cluster creation. There are two types of communication to consider in a GPFS cluster: administrative commands and daemon communication. Administrative commands use a remote shell (ssh, rsh, or other) and socket-based communications. It is considered a best practice to ensure that all GPFS daemon communication is performed over a private network. Refer to the GPFS IBM Developer wiki for further information and discussion on GPFS network configuration considerations and practices.

To use a separate network for administration command communication, you can change the “Admin node name” using the mmchnode command. In this example, the separate network address is designated by “a” (for Administration) at the end of the node name, aixlpar1a for example.

The mmcrcluster command warned us that not all nodes have the appropriate GPFS license designation. We use the mmchlicense command to assign a GPFS server license to both the nodes in the cluster.
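The two administrative changes described above, setting the admin node names and assigning the licenses, might be issued as follows. The name aixlpar2a is assumed by analogy with aixlpar1a, and the run helper prints the commands unless DRY_RUN=0 is set.

```shell
# Print each command by default; set DRY_RUN=0 on the real node to execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

set_admin_names() {
    # Route administration commands over the "a" network for each node.
    run mmchnode --admin-interface=aixlpar1a -N aixlpar1p
    run mmchnode --admin-interface=aixlpar2a -N aixlpar2p
}

assign_licenses() {
    # --accept confirms the license terms without an interactive prompt.
    run mmchlicense server --accept -N aixlpar1p,aixlpar2p
}

set_admin_names
assign_licenses
```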

The cluster is now configured. The mmlscluster command can be used to display cluster information.

At this point, you can use the mmdsh command to verify that the SSH communication is working as expected on all GPFS nodes. This runs a command on all the nodes in the cluster. If there is an SSH configuration problem, this command highlights the issues.
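Both checks can be run back to back, as in this sketch. The date command is just a harmless example of something to run on every node, and the run helper prints the commands unless DRY_RUN=0 is set.

```shell
# Print each command by default; set DRY_RUN=0 on the real node to execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

verify_cluster() {
    # Display the cluster name, configuration servers, and node list.
    run mmlscluster
    # Run a trivial command on every node; SSH problems surface here.
    run mmdsh date
}

verify_cluster
```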

Configuring Network Shared Disks

GPFS provides a block-level interface over TCP/IP networks called the Network Shared Disk (NSD) protocol. Whether using the NSD protocol or a direct attachment to the SAN, the mounted file system looks the same to the users and application (GPFS transparently handles I/O requests).

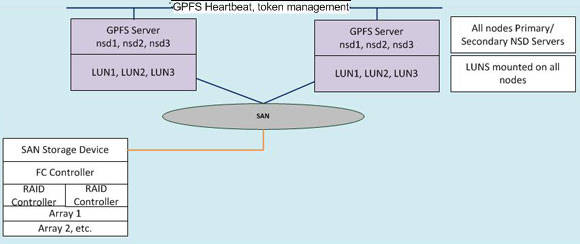

A shared disk cluster is the most basic environment. In this configuration, the storage is directly attached to all the systems in the cluster. The direct connection means that each shared block device is available concurrently to all of the nodes in the GPFS cluster. Direct access means that the storage is accessible using a Small Computer System Interface (SCSI) or other block-level protocol using a SAN.

The following figure illustrates a GPFS cluster where all nodes are connected to a common Fibre Channel SAN and storage device. The nodes are connected to the storage using the SAN and to each other using a local area network (LAN). Data used by applications running on the GPFS nodes flows over the SAN, and GPFS control information flows among the GPFS instances in the cluster over the LAN. This configuration is optimal when all nodes in the cluster need the highest performance access to the data.

Figure 3. Overview diagram of the GPFS cluster

The mmcrnsd command is used to create NSD devices for GPFS. First, we create a text file that contains a list of each of the hdisk names, their GPFS designation (data, metadata, both*), and the NSD name.

Note: Refer to the GPFS Concepts, Planning, and Installation document for guidance on selecting NSD device usage types.
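As an illustration, the descriptor file could be generated with a short loop. This sketch makes several assumptions: the file name /tmp/cg/gpfs-disks.txt, the NSD names nsd01 to nsd06, the use of hdisk1 to hdisk6, and the traditional colon-separated descriptor format (DiskName:ServerList::DiskUsage:FailureGroup:DesiredName) rather than the newer stanza format.

```shell
# Hypothetical location for the NSD descriptor file.
NSD_FILE=/tmp/cg/gpfs-disks.txt
mkdir -p "$(dirname "$NSD_FILE")"

# One descriptor per disk: no NSD servers (SAN-attached), data and metadata
# on every disk, default failure group, and an explicit NSD name.
i=1
while [ "$i" -le 6 ]; do
    printf 'hdisk%d:::dataAndMetadata::nsd%02d\n' "$i" "$i"
    i=$((i + 1))
done > "$NSD_FILE"

cat "$NSD_FILE"
```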

Then, run the mmcrnsd command to create the NSD devices.

The lspv command now shows the NSD name associated with each AIX hdisk.

The mmlsnsd command displays information for each NSD, in particular which GPFS file system is associated with each device. At this point, we have not created a GPFS file system, so each disk is currently free. You’ll notice that under NSD servers each device is shown as directly attached. This is expected for SAN-attached disks.
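The creation and verification steps above could be run as in this sketch. The descriptor file path is hypothetical, and the run helper prints the commands unless DRY_RUN=0 is set.

```shell
# Print each command by default; set DRY_RUN=0 on the real node to execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

create_nsds() {
    # Create one NSD per descriptor in the file.
    run mmcrnsd -F /tmp/cg/gpfs-disks.txt
    # Each GPFS hdisk should now show its NSD name.
    run lspv
    # Every NSD should be listed as a free disk at this stage.
    run mmlsnsd
}

create_nsds
```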

GPFS file system configuration

Next, the GPFS file systems can be configured. The mmcrfs command is used to create the file systems. We have chosen to create two file systems: /gpfs and /gpfs1. The /gpfs (gpfs0) file system will be configured with a GPFS block size of 256K (the default) and /gpfs1 (gpfs1) with a block size of 1M*. Both file systems are configured for replication (-M2 -R2). The /tmp/cg/gpfs-disk.txt file is specified for /gpfs and /tmp/cg/gpfs1-disk.txt for /gpfs1. These files specify which NSD devices are used for each file system during creation.

Note: Choose your block size carefully. It is not possible to change this value after the GPFS device has been created.
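A sketch of the two creation commands follows. It uses the -T mount point form, which, as far as I know, is the documented syntax for GPFS 3.5; earlier releases took the mount point as the first argument. Note that -M and -R set the maximum number of metadata and data replicas, while the defaults are controlled separately with -m and -r. The run helper prints the commands unless DRY_RUN=0 is set.

```shell
# Print each command by default; set DRY_RUN=0 on the real node to execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

create_file_systems() {
    # gpfs0 keeps the default block size.
    run mmcrfs gpfs0 -F /tmp/cg/gpfs-disk.txt -T /gpfs -M 2 -R 2
    # gpfs1 uses a 1M block size, which cannot be changed later.
    run mmcrfs gpfs1 -F /tmp/cg/gpfs1-disk.txt -T /gpfs1 -B 1M -M 2 -R 2
    # Mount every GPFS file system on all nodes.
    run mmmount all -a
}

create_file_systems
```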

The mmlsnsd command displays the NSD configuration per file system. NSD devices 1 to 3 are assigned to the gpfs0 device and devices 4 to 6 are assigned to gpfs1.

Both GPFS file systems are now available on both nodes.

The mmdsh command can be used here to quickly check the file system status on all the nodes.

If more detailed information is required, the mmdf command can be used.
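For instance, the status checks might look like the sketch below. The df -g invocation is one possible choice of command to pass to mmdsh, and the run helper prints the commands unless DRY_RUN=0 is set.

```shell
# Print each command by default; set DRY_RUN=0 on the real node to execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

check_file_systems() {
    # Show size and usage of both mount points on every node.
    run mmdsh df -g /gpfs /gpfs1
    # Show per-NSD capacity and free space for each file system.
    run mmdf gpfs0
    run mmdf gpfs1
}

check_file_systems
```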

Node quorum with tiebreaker disks

Tiebreaker disks are recommended when you have a two-node cluster or you have a cluster where all of the nodes are SAN-attached to a common set of logical unit numbers (LUNs) and you want to continue to serve data with a single surviving node. Typically, tiebreaker disks are only used in two-node clusters. Tiebreaker disks are not special NSDs; you can use any NSD as a tiebreaker disk.

In this example, we chose three (out of six) NSD devices as tiebreaker disks. We stopped GPFS on all nodes and configured the cluster accordingly.
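The sequence could be sketched as follows. The NSD names nsd01 to nsd03 are assumptions; substitute the names reported by mmlsnsd. The run helper prints the commands unless DRY_RUN=0 is set.

```shell
# Print each command by default; set DRY_RUN=0 on the real node to execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

configure_tiebreakers() {
    # Stop GPFS on all nodes before changing the quorum configuration.
    run mmshutdown -a
    # Semicolon-separated list of NSD names, passed as a single argument.
    run mmchconfig "tiebreakerDisks=nsd01;nsd02;nsd03"
    # Start GPFS again on all nodes.
    run mmstartup -a
}

configure_tiebreakers
```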

Cluster daemon status

There are two GPFS daemons (processes) that remain active while GPFS is active (mmfsd64 and runmmfs).

You can use the mmgetstate command to view the status of the GPFS daemons on all the nodes in the cluster.
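A short sketch of both checks is shown here. The show_daemon_state function and the run helper are hypothetical wrappers; the ps pipeline runs as written and simply reports when no GPFS processes are found.

```shell
# Print each command by default; set DRY_RUN=0 on the real node to execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

show_daemon_state() {
    # -a all nodes, -L quorum details, -s summary of node counts
    run mmgetstate -a -L -s
}

show_daemon_state

# Look for the GPFS daemon processes; the [m] keeps grep from matching itself.
ps -ef | grep '[m]mfs' || echo "no GPFS daemon processes found"
```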

Summary

Congratulations! You’ve just configured your first GPFS cluster. In this article, you’ve learnt how to build a simple two-node GPFS cluster on AIX. This type of configuration can be easily deployed to support clustered workloads with high availability requirements, for example an MQ multi-instance cluster. GPFS offers many configuration options; you can spend a lot of time planning for a GPFS cluster. If you are seriously considering a GPFS deployment, I encourage you to read all of the available GPFS documentation listed in the Resources section.